How To Configure High-Availability Cluster on CentOS 7 / RHEL 7

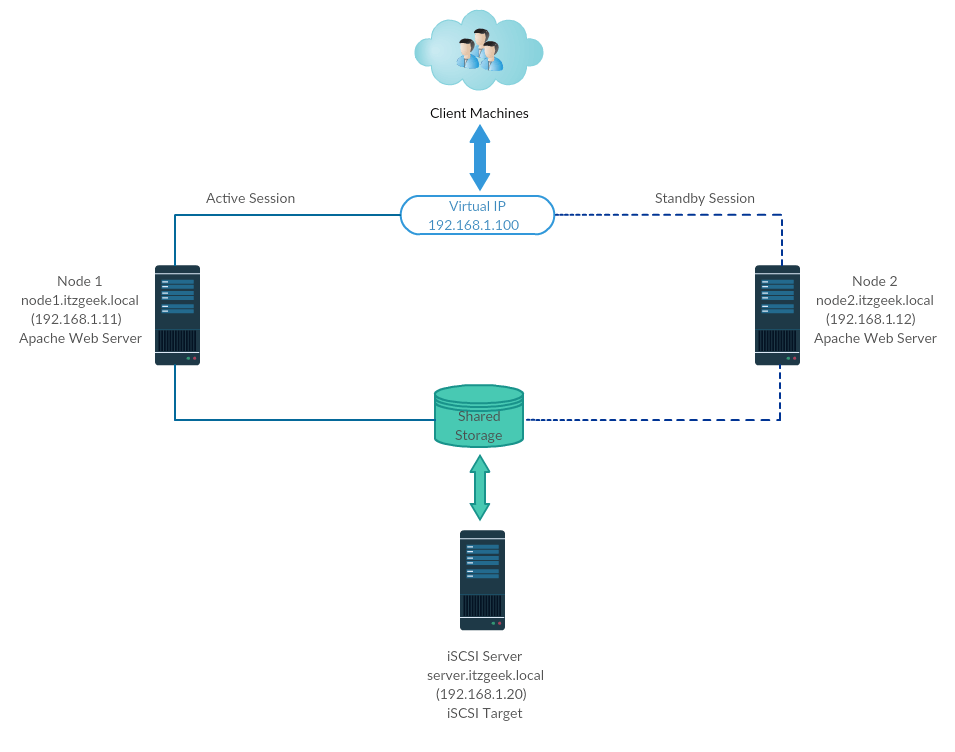

A High-Availability cluster, also known as a failover cluster (active-passive cluster), is one of the most widely used cluster types in production environments. It keeps services available even if one of the cluster nodes fails. If the server running the application fails for some reason (for example, a hardware failure), the cluster software (Pacemaker) restarts the application on another node.

High availability is mainly used for databases, custom applications, and file sharing. Failover is more than just starting an application: it involves a series of operations such as mounting filesystems, configuring networks, and starting dependent applications.

Environment

CentOS 7 / RHEL 7 supports failover clustering with Pacemaker. Here, we will configure the Apache (web) server as a highly available application.

As mentioned, failover is a series of operations, so we need to configure the filesystem and the network as cluster resources. For the filesystem, we will use shared storage from an iSCSI server.

| Host Name | IP Address | OS | Purpose |
|---|---|---|---|
| node1.itzgeek.local | 192.168.1.11 | CentOS 7 | Cluster Node 1 |
| node2.itzgeek.local | 192.168.1.12 | CentOS 7 | Cluster Node 2 |
| server.itzgeek.local | 192.168.1.20 | CentOS 7 | iSCSI Shared Storage |
| - | 192.168.1.100 | - | Virtual Cluster IP (Apache) |

All are running on VMware Workstation.

Shared Storage

Shared storage is one of the most important resources in a high-availability cluster because it holds the data of the running application. All nodes in the cluster have access to the shared storage, so whichever node takes over sees the latest data. SAN storage is the most widely used shared storage in production environments. For this demo, we will use iSCSI storage.

Install Packages

iSCSI Server

[root@server ~]# yum install targetcli -y

Cluster Nodes

Now configure the cluster nodes to use the iSCSI storage. Perform the step below on all of your cluster nodes.

# yum install iscsi-initiator-utils -y

Setup Disk

Here, we will create a 10GB LVM logical volume on the iSCSI server to use as shared storage for the cluster nodes. First, list the disks attached to the target server.

[root@server ~]# fdisk -l | grep -i sd

Output:

Disk /dev/sda: 107.4 GB, 107374182400 bytes, 209715200 sectors

/dev/sda1 * 2048 1026047 512000 83 Linux

/dev/sda2 1026048 209715199 104344576 8e Linux LVM

Disk /dev/sdb: 10.7 GB, 10737418240 bytes, 20971520 sectors

From the above output, you can see that my system has a 10GB disk (/dev/sdb). Create an LVM logical volume on /dev/sdb (replace /dev/sdb with your disk name).

[root@server ~]# pvcreate /dev/sdb

[root@server ~]# vgcreate vg_iscsi /dev/sdb

[root@server ~]# lvcreate -l 100%FREE -n lv_iscsi vg_iscsi

Create Shared Storage

Get the initiator name of each cluster node by running the command below on both nodes.

# cat /etc/iscsi/initiatorname.iscsi

Node 1:

InitiatorName=iqn.1994-05.com.redhat:b11df35b6f75

Node 2:

InitiatorName=iqn.1994-05.com.redhat:119eaf9252a
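Rather than copying the IQN by hand, a small helper can extract it from the file. This is only a sketch: the function name initiator_iqn is hypothetical, and it assumes the file holds a single InitiatorName= line like the ones shown above.

```shell
# Print the IQN stored in an iSCSI initiator name file.
# Assumption: the file contains one "InitiatorName=<iqn>" line.
# initiator_iqn is an illustrative name, not part of iscsi-initiator-utils.
initiator_iqn() {
    local file="${1:-/etc/iscsi/initiatorname.iscsi}"
    # Strip the "InitiatorName=" prefix and print what remains.
    sed -n 's/^InitiatorName=//p' "$file"
}
```

Running initiator_iqn on each node prints just the IQN, ready to paste into the ACL step on the iSCSI server.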

Run the command below to open the interactive targetcli shell.

[root@server ~]# targetcli

Output:

targetcli shell version 2.1.fb46

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> cd /backstores/block

/backstores/block> create iscsi_shared_storage /dev/vg_iscsi/lv_iscsi

Created block storage object iscsi_shared_storage using /dev/vg_iscsi/lv_iscsi.

/backstores/block> cd /iscsi

/iscsi> create

Created target iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/iscsi> cd iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5/tpg1/acls

/iscsi/iqn.20...ad5/tpg1/acls> create iqn.1994-05.com.redhat:b11df35b6f75 << Initiator of Node 1

Created Node ACL for iqn.1994-05.com.redhat:b11df35b6f75

/iscsi/iqn.20...ad5/tpg1/acls> create iqn.1994-05.com.redhat:119eaf9252a << Initiator of Node 2

Created Node ACL for iqn.1994-05.com.redhat:119eaf9252a

/iscsi/iqn.20...ad5/tpg1/acls> cd /iscsi/iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5/tpg1/luns

/iscsi/iqn.20...ad5/tpg1/luns> create /backstores/block/iscsi_shared_storage

Created LUN 0.

Created LUN 0->0 mapping in node ACL iqn.1994-05.com.redhat:119eaf9252a

Created LUN 0->0 mapping in node ACL iqn.1994-05.com.redhat:b11df35b6f75

/iscsi/iqn.20...ad5/tpg1/luns> cd /

/> ls

o- / ...................................................................................................... [...]
  o- backstores ........................................................................................... [...]
  | o- block ............................................................................... [Storage Objects: 1]
  | | o- iscsi_shared_storage ........................... [/dev/vg_iscsi/lv_iscsi (10.0GiB) write-thru activated]
  | |   o- alua ................................................................................ [ALUA Groups: 1]
  | |     o- default_tg_pt_gp .................................................... [ALUA state: Active/optimized]
  | o- fileio .............................................................................. [Storage Objects: 0]
  | o- pscsi ............................................................................... [Storage Objects: 0]
  | o- ramdisk ............................................................................. [Storage Objects: 0]
  o- iscsi ......................................................................................... [Targets: 1]
  | o- iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5 ....................................... [TPGs: 1]
  |   o- tpg1 ............................................................................ [no-gen-acls, no-auth]
  |     o- acls ....................................................................................... [ACLs: 2]
  |     | o- iqn.1994-05.com.redhat:119eaf9252a ................................................ [Mapped LUNs: 1]
  |     | | o- mapped_lun0 ............................................... [lun0 block/iscsi_shared_storage (rw)]
  |     | o- iqn.1994-05.com.redhat:b11df35b6f75 ............................................... [Mapped LUNs: 1]
  |     |   o- mapped_lun0 ............................................... [lun0 block/iscsi_shared_storage (rw)]
  |     o- luns ....................................................................................... [LUNs: 1]
  |     | o- lun0 ...................... [block/iscsi_shared_storage (/dev/vg_iscsi/lv_iscsi) (default_tg_pt_gp)]
  |     o- portals ................................................................................. [Portals: 1]
  |       o- 0.0.0.0:3260 .................................................................................. [OK]
  o- loopback ...................................................................................... [Targets: 0]

/> saveconfig

Configuration saved to /etc/target/saveconfig.json

/> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup/.

Configuration saved to /etc/target/saveconfig.json

[root@server ~]#
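The interactive session can also be expressed as one-shot commands, because targetcli accepts a path and a command as arguments. The sketch below only prints the commands so you can review them before running anything. The function name targetcli_plan is hypothetical, and passing an explicit target IQN to create is a deviation from the session above, where targetcli generated the IQN itself.

```shell
# Print (do not run) non-interactive targetcli commands that mirror the
# interactive session: one block backstore, one target, one ACL per initiator.
# Usage: targetcli_plan <target_iqn> <initiator_iqn>...
# targetcli_plan is an illustrative helper, not part of targetcli.
targetcli_plan() {
    local target="$1"
    shift
    local store="iscsi_shared_storage"
    local dev="/dev/vg_iscsi/lv_iscsi"
    local initiator

    echo "targetcli /backstores/block create name=$store dev=$dev"
    echo "targetcli /iscsi create $target"
    for initiator in "$@"; do
        echo "targetcli /iscsi/$target/tpg1/acls create $initiator"
    done
    echo "targetcli /iscsi/$target/tpg1/luns create /backstores/block/$store"
    echo "targetcli saveconfig"
}
```

For example, targetcli_plan iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5 iqn.1994-05.com.redhat:b11df35b6f75 iqn.1994-05.com.redhat:119eaf9252a prints six commands that you can paste into a root shell on the iSCSI server.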

Enable and restart the target service.

[root@server ~]# systemctl enable target

[root@server ~]# systemctl restart target

Configure the firewall to allow iSCSI traffic.

[root@server ~]# firewall-cmd --permanent --add-port=3260/tcp

[root@server ~]# firewall-cmd --reload

Discover Shared Storage

On both cluster nodes, discover the target using the command below.

# iscsiadm -m discovery -t st -p 192.168.1.20

Output:

192.168.1.20:3260,1 iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5
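Each discovery line has the form portal,group followed by the target IQN, so the IQN needed for the login step can be pulled out with a one-line filter. This is a minimal sketch; the function name discovered_targets is hypothetical.

```shell
# Read "iscsiadm -m discovery" output on stdin and print only the target
# IQNs (the second whitespace-separated field of each line).
# discovered_targets is an illustrative name, not an iscsiadm feature.
discovered_targets() {
    awk 'NF >= 2 { print $2 }'
}
```

On a node, iscsiadm -m discovery -t st -p 192.168.1.20 | discovered_targets would print the IQN on its own line.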

Now, log in to the target with the command below.

# iscsiadm -m node -T iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5 -p 192.168.1.20 -l

Output:

Logging in to [iface: default, target: iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5, portal: 192.168.1.20,3260] (multiple)

Login to [iface: default, target: iqn.2003-01.org.linux-iscsi.server.x8664:sn.518a1f561ad5, portal: 192.168.1.20,3260] successful.

Restart and enable the initiator service.

# systemctl restart iscsid

# systemctl enable iscsid

Setup Cluster Nodes

Host Entry

On each node, add a host entry for every cluster node. The nodes use these hostnames to communicate with each other.

# vi /etc/hosts

The host entries will look like the ones below.

192.168.1.11 node1.itzgeek.local node1

192.168.1.12 node2.itzgeek.local node2
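Because the cluster depends on these names resolving on every node, it can be worth checking the file before moving on. The helper below is a sketch under the assumption that names are resolved from /etc/hosts only; hosts_has_all is a hypothetical name.

```shell
# Return 0 if every given hostname appears as a name field in the hosts
# file, otherwise report the first missing name on stderr and return 1.
# hosts_has_all is an illustrative helper.
hosts_has_all() {
    local file="$1"
    shift
    local name
    for name in "$@"; do
        awk -v n="$name" '
            /^[ \t]*#/ { next }                       # skip comment lines
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }
        ' "$file" || { echo "missing: $name" >&2; return 1; }
    done
}
```

For example, hosts_has_all /etc/hosts node1.itzgeek.local node2.itzgeek.local returns success only when both names are present.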

Shared Storage

On each node, check whether the new disk is visible. On my nodes, /dev/sdb is the disk coming from the iSCSI storage.

# fdisk -l | grep -i sd

Output:

Disk /dev/sda: 107.4 GB, 107374182400 bytes, 209715200 sectors

/dev/sda1 * 2048 1026047 512000 83 Linux

/dev/sda2 1026048 209715199 104344576 8e Linux LVM

Disk /dev/sdb: 10.7 GB, 10733223936 bytes, 20963328 sectors
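The disk seen by the nodes is slightly smaller than the 10GB disk on the iSCSI server. The arithmetic below uses the byte counts from the two fdisk outputs and shows the gap is exactly 4 MiB. That is consistent with LVM on the server setting aside space for its metadata and allocating the volume in 4 MiB extents; treat that explanation as an assumption, since the extent size is not shown in the output. The helper name bytes_to_mib is illustrative.

```shell
# Convert a byte count to whole MiB using integer shell arithmetic.
bytes_to_mib() {
    echo $(( $1 / 1024 / 1024 ))
}

server_disk=10737418240   # /dev/sdb on the iSCSI server (from fdisk)
node_disk=10733223936     # /dev/sdb as seen by the cluster nodes (from fdisk)

# Space that did not reach the exported volume.
echo "difference: $(bytes_to_mib $((server_disk - node_disk))) MiB"   # difference: 4 MiB

# Number of 4 MiB extents that fit in the exported volume (assumed extent size).
echo "extents: $(( $(bytes_to_mib "$node_disk") / 4 ))"               # extents: 2559
```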

On any one of your nodes (e.g., node1), create a filesystem for the Apache web server to hold the website files. We will create the filesystem on LVM.

[root@node1 ~]# pvcreate /dev/sdb

[root@node1 ~]# vgcreate vg_apache /dev/sdb

[root@node1 ~]# lvcreate -n lv_apache -l 100%FREE vg_apache

[root@node1 ~]# mkfs.ext4 /dev/vg_apache/lv_apache

Now, go to the other node and run the commands below to detect the new volume.

[root@node2 ~]# pvscan

[root@node2 ~]# vgscan

[root@node2 ~]# lvscan

Finally, verify that the logical volume we created on node1 is available on the other node (e.g., node2) using the command below.

[root@node2 ~]# lvdisplay /dev/vg_apache/lv_apache

Output: You should see /dev/vg_apache/lv_apache on node2.itzgeek.local

--- Logical volume ---

LV Path /dev/vg_apache/lv_apache

LV Name lv_apache

VG Name vg_apache

LV UUID mFUyuk-xTtK-r7PV-PLPq-yoVC-Ktto-TcaYpS

LV Write Access read/write

LV Creation host, time node1.itzgeek.local, 2019-07-05 08:57:33 +0530

LV Status available

# open 0

LV Size 9.96 GiB

Current LE 2551

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:3

Install Packages

Install the cluster packages (Pacemaker) on all nodes using the command below.

# yum install pcs fence-agents-all -y

Allow the high-availability service through the firewall so the nodes can communicate with each other. You can skip this step if the system doesn’t have firewalld installed.

# firewall-cmd --permanent --add-service=high-availability

# firewall-cmd --add-service=high-availability

Use the command below to list the services allowed in the firewall.

# firewall-cmd --list-service

Output:

ssh dhcpv6-client high-availability
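To check this from a script instead of reading the list by eye, the output can be piped through a small filter. This is a sketch; service_allowed is a hypothetical helper name, and it assumes the space-separated format shown above.

```shell
# Return 0 if the named service appears in a space-separated service list
# read from stdin (the format printed by "firewall-cmd --list-service").
# service_allowed is an illustrative helper, not a firewalld command.
service_allowed() {
    # One service per line, then require an exact whole-line match.
    tr -s ' ' '\n' | grep -qxF -- "$1"
}
```

On a node, firewall-cmd --list-service | service_allowed high-availability && echo "allowed" prints allowed only when the service is open in the running configuration.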

Set a password for the hacluster user. This account is the cluster administration account. We suggest you set the same password on all nodes.

# passwd hacluster

Start the pcsd service (the pcs daemon used to manage the cluster) and enable it to start automatically at system startup.

# systemctl start pcsd

# systemctl enable pcsd

Remember to run the above commands on all of your cluster nodes.

Create a High Availability Cluster

Authorize the nodes using the command below. Run it on any one of the nodes.

[root@node1 ~]# pcs cluster auth node1.itzgeek.local node2.itzgeek.local

Output:

Username: hacluster

Password: << Enter Password

node1.itzgeek.local: Authorized

node2.itzgeek.local: Authorized

Create a cluster.

[root@node1 ~]# pcs cluster setup --start --name itzgeek_cluster node1.itzgeek.local node2.itzgeek.local

Output:

Destroying cluster on nodes: node1.itzgeek.local, node2.itzgeek.local...

node1.itzgeek.local: Stopping Cluster (pacemaker)...

node2.itzgeek.local: Stopping Cluster (pacemaker)...

node2.itzgeek.local: Successfully destroyed cluster

node1.itzgeek.local: Successfully destroyed cluster

Sending 'pacemaker_remote authkey' to 'node1.itzgeek.local', 'node2.itzgeek.local'

node1.itzgeek.local: successful distribution of the file 'pacemaker_remote authkey'

node2.itzgeek.local: successful distribution of the file 'pacemaker_remote authkey'

Sending cluster config files to the nodes...

node1.itzgeek.local: Succeeded

node2.itzgeek.local: Succeeded

Starting cluster on nodes: node1.itzgeek.local, node2.itzgeek.local...

node1.itzgeek.local: Starting Cluster (corosync)...

node2.itzgeek.local: Starting Cluster (corosync)...

node1.itzgeek.local: Starting Cluster (pacemaker)...

node2.itzgeek.local: Starting Cluster (pacemaker)...

Synchronizing pcsd certificates on nodes node1.itzgeek.local, node2.itzgeek.local...

node1.itzgeek.local: Success

node2.itzgeek.local: Success

Restarting pcsd on the nodes in order to reload the certificates...

node1.itzgeek.local: Success

node2.itzgeek.local: Success

Enable the cluster to start at system startup.

[root@node1 ~]# pcs cluster enable --all

Output:

node1.itzgeek.local: Cluster Enabled

node2.itzgeek.local: Cluster Enabled

Use the command below to get the status of the cluster.

[root@node1 ~]# pcs cluster status

Output:

Cluster Status:

Stack: corosync

Current DC: node2.itzgeek.local (version 1.1.19-8.el7_6.4-c3c624ea3d) - partition with quorum

Last updated: Fri Jul 5 09:14:57 2019

Last change: Fri Jul 5 09:13:12 2019 by hacluster via crmd on node2.itzgeek.local

2 nodes configured

0 resources configured

PCSD Status:

node1.itzgeek.local: Online

node2.itzgeek.local: Online

Run the command below to get detailed information about the cluster, including its resources, Pacemaker status, and node details.

[root@node1 ~]# pcs status

Output:

Cluster name: itzgeek_cluster

WARNINGS:

No stonith devices and stonith-enabled is not false

Stack: corosync

Current DC: node2.itzgeek.local (version 1.1.19-8.el7_6.4-c3c624ea3d) - partition with quorum

Last updated: Fri Jul 5 09:15:37 2019

Last change: Fri Jul 5 09:13:12 2019 by hacluster via crmd on node2.itzgeek.local

2 nodes configured

0 resources configured

Online: [ node1.itzgeek.local node2.itzgeek.local ]

No resources

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
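Before adding resources, both nodes should appear on the Online line of this output. The filter below is a sketch that extracts those node names so a script can count them; online_nodes is a hypothetical name, and the pattern assumes the Online: [ ... ] format printed by this Pacemaker version.

```shell
# Read "pcs status" output on stdin and print the nodes listed on the
# "Online: [ ... ]" line, one per line.
# online_nodes is an illustrative helper, not a pcs subcommand.
online_nodes() {
    sed -n 's/^[[:space:]]*Online: \[ \(.*\) ][[:space:]]*$/\1/p' | tr -s ' ' '\n'
}
```

For example, pcs status | online_nodes | grep -c . should print 2 on this two-node cluster.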

Fencing Devices

A fencing device is a hardware or software device that isolates a problem node, either by resetting the node or by cutting off its access to the shared storage. My demo cluster runs on VMware virtual machines, so I am not showing a fencing device setup here, but you can follow this guide to set up a fencing device.

Cluster Resources

Prepare resources

Apache Web Server

Install the Apache web server on both nodes.

# yum install -y httpd wget

Edit the configuration file.

# vi /etc/httpd/conf/httpd.conf

Add the content below to the end of the file on both cluster nodes.

<Location /server-status>
SetHandler server-status
Order deny,allow
Deny from all
Allow from 127.0.0.1
</Location>

Now we need to use the shared storage to store the web content (HTML) files. Perform the operations below on any one of the nodes.

[root@node2 ~]# mount /dev/vg_apache/lv_apache /var/www/

[root@node2 ~]# mkdir /var/www/html

[root@node2 ~]# mkdir /var/www/cgi-bin

[root@node2 ~]# mkdir /var/www/error

[root@node2 ~]# restorecon -R /var/www

[root@node2 ~]# cat <<-END >/var/www/html/index.html
<html>
<body>Hello, Welcome!. This Page Is Served By Red Hat High Availability Cluster</body>
</html>
END

[root@node2 ~]# umount /var/www

Allow the Apache service in the firewall on both nodes.

# firewall-cmd --permanent --add-service=http

# firewall-cmd --reload

Create Resources

Create a filesystem resource for the Apache server. Use the storage coming from the iSCSI server.

# pcs resource create httpd_fs Filesystem device="/dev/mapper/vg_apache-lv_apache" directory="/var/www" fstype="ext4" --group apache

Output:

Assumed agent name 'ocf:heartbeat:Filesystem' (deduced from 'Filesystem')

Create an IP address resource. This IP address will act as a virtual IP address for Apache, and clients will use it to access the web content instead of an individual node’s IP.

# pcs resource create httpd_vip IPaddr2 ip=192.168.1.100 cidr_netmask=24 --group apache

Output:

Assumed agent name 'ocf:heartbeat:IPaddr2' (deduced from 'IPaddr2')

Create an Apache resource that monitors the status of the Apache server and moves the resources to another node in case of a failure.

# pcs resource create httpd_ser apache configfile="/etc/httpd/conf/httpd.conf" statusurl="http://127.0.0.1/server-status" --group apache

Output:

Assumed agent name 'ocf:heartbeat:apache' (deduced from 'apache')

Since we are not using fencing, disable it (STONITH). You must disable it to start the cluster resources, but disabling STONITH in a production environment is not recommended.

# pcs property set stonith-enabled=false

Check the status of the cluster.

[root@node1 ~]# pcs status

Output:

Cluster name: itzgeek_cluster

Stack: corosync

Current DC: node2.itzgeek.local (version 1.1.19-8.el7_6.4-c3c624ea3d) - partition with quorum

Last updated: Fri Jul 5 09:26:04 2019

Last change: Fri Jul 5 09:25:58 2019 by root via cibadmin on node1.itzgeek.local

2 nodes configured

3 resources configured

Online: [ node1.itzgeek.local node2.itzgeek.local ]

Full list of resources:

Resource Group: apache

httpd_fs (ocf::heartbeat:Filesystem): Started node1.itzgeek.local

httpd_vip (ocf::heartbeat:IPaddr2): Started node1.itzgeek.local

httpd_ser (ocf::heartbeat:apache): Started node1.itzgeek.local

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
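All three resources should be started on the same node, because they belong to one resource group. The filter below is a sketch for reading that node out of the status output; resource_node is a hypothetical name, and it assumes the line layout shown above (resource name, agent, state, node).

```shell
# Read "pcs status" output on stdin and print the node on which the named
# resource is reported as Started.
# resource_node is an illustrative helper, not a pcs subcommand.
resource_node() {
    awk -v r="$1" '$1 == r && $3 == "Started" { print $4 }'
}
```

For example, pcs status | resource_node httpd_vip prints the node that currently holds the virtual IP.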

Verify High Availability Cluster

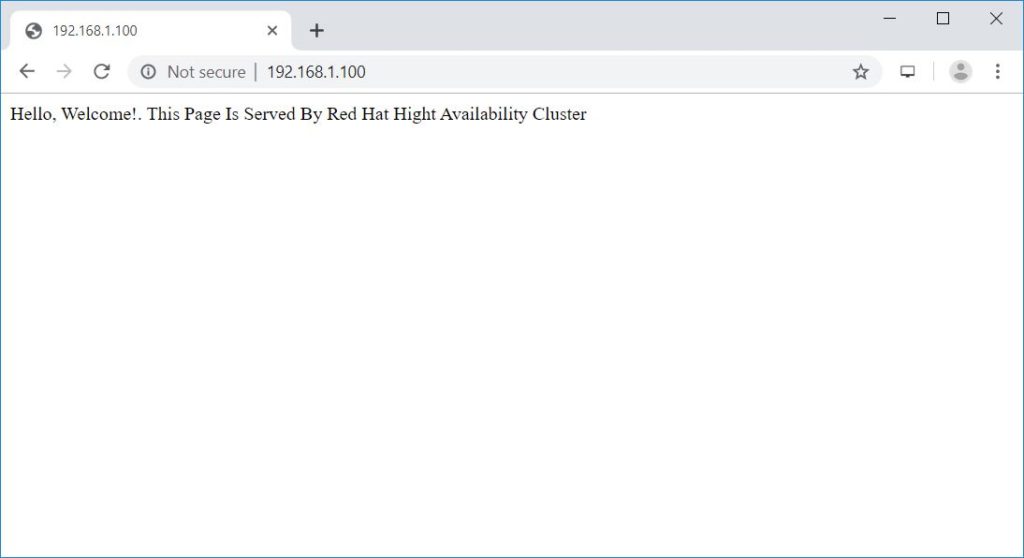

Once the cluster is up and running, point a web browser to the Apache virtual IP address. You should get a web page like below.

Test High Availability Cluster

Let’s test the failover of the resources by stopping the cluster on the active node.

[root@node1 ~]# pcs cluster stop node1.itzgeek.local
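To see the effect of the failover from a client, you can probe the virtual IP while the resources move. The loop below is a minimal sketch that assumes curl is installed on the machine running it; count_failed_probes is a hypothetical name, and the one-second interval and two-second timeout are arbitrary choices.

```shell
# Probe a URL a fixed number of times, one second apart, and print how
# many probes failed. Assumes curl is available.
# count_failed_probes is an illustrative helper.
count_failed_probes() {
    local url="$1" attempts="$2" failed=0 i=0
    while [ "$i" -lt "$attempts" ]; do
        # --fail makes curl return non-zero on HTTP errors as well.
        curl --silent --fail --max-time 2 --output /dev/null "$url" \
            || failed=$((failed + 1))
        sleep 1
        i=$((i + 1))
    done
    echo "$failed"
}
```

Start count_failed_probes http://192.168.1.100/ 30 in a second terminal just before stopping node1. A small number of failed probes while the resources start on node2 is expected. Afterwards, pcs status should show the apache group on node2.itzgeek.local, and pcs cluster start node1.itzgeek.local brings node1 back into the cluster.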

Conclusion

That’s all. In this post, you have learned how to set up a High-Availability cluster on CentOS 7. Please let us know your thoughts in the comment section.